Attempting an assembly of our geoduck metagenomics HiSeqX data using Megahit (v.1.1.4) on a Mox node.

Assembly was run using default settings.

Assembly coverage was assessed using the following BBmap (v.38.34) scripts:

bbwrap.sh(alignments)pileup.sh(coverage)

SBATCH script is linked here and pasted in full below:

#!/bin/bash

## Job Name

#SBATCH --job-name=megahit

## Allocation Definition

#SBATCH --account=srlab

#SBATCH --partition=srlab

## Resources

## Nodes

#SBATCH --nodes=1

## Walltime (days-hours:minutes:seconds format)

#SBATCH --time=5-00:00:00

## Memory per node

#SBATCH --mem=500G

##turn on e-mail notification

#SBATCH --mail-type=ALL

#SBATCH --mail-user=samwhite@uw.edu

## Specify the working directory for this job

#SBATCH --workdir=/gscratch/scrubbed/samwhite/outputs/20190102_metagenomics_geo_megahit

# Load Python Mox module for Python module availability

module load intel-python3_2017

# Load Open MPI module for parallel, multi-node processing

module load icc_19-ompi_3.1.2

# SegFault fix?

export THREADS_DAEMON_MODEL=1

# Document programs in PATH (primarily for program version ID)

date >> system_path.log

echo "" >> system_path.log

echo "System PATH for $SLURM_JOB_ID" >> system_path.log

echo "" >> system_path.log

printf "%0.s-" {1..10} >> system_path.log

echo ${PATH} | tr : \\n >> system_path.log

# variables

fastq_dir=/gscratch/srlab/sam/data/metagenomics/P_generosa

megahit=/gscratch/srlab/programs/megahit_v1.1.4_LINUX_CPUONLY_x86_64-bin/megahit

bbmap_dir=/gscratch/srlab/programs/bbmap_38.34

## Inititalize arrays

fastq_array_R1=()

fastq_array_R2=()

# Create array of fastq R1 files

for fastq in ${fastq_dir}/*R1*.gz; do

fastq_array_R1+=(${fastq})

done

# Create array of fastq R2 files

for fastq in ${fastq_dir}/*R2*.gz; do

fastq_array_R2+=(${fastq})

done

# Create comma-separated list of input files

R1_fastq_list=$(IFS=,; echo "${fastq_array_R1[*]}")

R2_fastq_list=$(IFS=,; echo "${fastq_array_R2[*]}")

# Run Megahit using paired-end reads

${megahit} \

-1 ${R1_fastq_list} \

-2 ${R2_fastq_list} \

--num-cpu-threads 28

# Determine coverage

## Align reads with BBmap BBwrap

${bbmap_dir}/bbwrap.sh \

ref=megahit_out/final.contigs.fa \

in1=${R1_fastq_list} \

in2=${R2_fastq_list} \

out=aln.sam.gz

## Output contig coverage

${bbmap_dir}/pileup.sh \

in=aln.sam.gz \

out=coverage.txtRESULTS

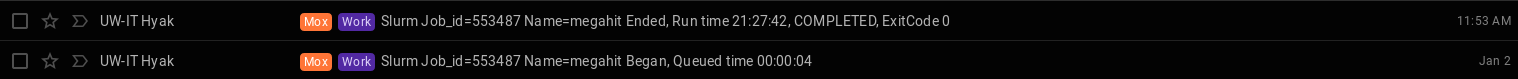

This took a little less than a day to run:

Output folder:

Assembly (FastA):

Alignment (Gzipped SAM):

Coverage file (txt):

This assembly yielded 2,276,153 contigs (grep -c ">" final.contigs.fa).

Here’re some alignment stats from the BBmmap pileup.sh command (can be found at end of the slurm file (text)):

Reads: 163236894

Mapped reads: 143703254

Mapped bases: 16437148607

Ref scaffolds: 2276153

Ref bases: 2243059881

Percent mapped: 88.034

Percent proper pairs: 76.768

Average coverage: 7.328

Standard deviation: 27.892

Percent scaffolds with any coverage: 70.54

Percent of reference bases covered: 56.07Also, ran Quast to assess assembly:

python \

/home/sam/software/quast-4.5/quast.py \

--threads=20 \

--min-contig=100 \

--labels=meta_megahit_assembly \

/mnt/gannet/Atumefaciens/20190102_metagenomics_geo_megahit/megahit_out/final.contigs.faQuast output folder:

Quast report (HTML):

Quast report (text):

Quast report.txt:

All statistics are based on contigs of size >= 100 bp, unless otherwise noted (e.g., "# contigs (>= 0 bp)" and "Total length (>= 0 bp)" include all contigs).

Assembly meta_megahit_assembly

# contigs (>= 0 bp) 2276153

# contigs (>= 1000 bp) 496414

# contigs (>= 5000 bp) 41075

# contigs (>= 10000 bp) 12255

# contigs (>= 25000 bp) 2797

# contigs (>= 50000 bp) 864

Total length (>= 0 bp) 2243059881

Total length (>= 1000 bp) 1342986697

Total length (>= 5000 bp) 487449312

Total length (>= 10000 bp) 293688660

Total length (>= 25000 bp) 154694058

Total length (>= 50000 bp) 89125138

# contigs 2276153

Largest contig 2035937

Total length 2243059881

GC (%) 39.32

N50 1413

N75 624

L50 308641

L75 932685

# N's per 100 kbp 0.00

Now, should probably BLASTn, identify ORFs, translate and BLASTp those ORFs.